Environment

- Kubernetes 1.26, Kubernetes 1.27

- Rancher 2.8 or later

Situation

- You have upgraded your Rancher cluster from Kubernetes <= 1.26 to a version >= 1.27.

- When pulling Docker images from a private AWS Elastic Container Registry (ECR), you receive the error:

ErrImagePull (rpc error: code = Unknown desc = Error response from daemon: Head https://xxx.dkr.ecr.eu-central-1.amazonaws.com/v2/package/app/manifests/555: no basic auth credentials).

- Your AWS credentials (access key and secret key) are still valid and the EC2 IAM instance roles have not changed in the meantime.

Root cause

To keep the Kubernetes core clean, the Kubernetes project is continuously removing cloud provider-specific code. This in-tree code is migrated to external projects that can be developed and installed independently. One such out-of-tree provider is cloud-provider-aws for AWS.

Up to and including Kubernetes 1.26, the in-tree provider was responsible for resolving ECR credentials. To download container images from a private ECR, you cannot use the AWS access key or secret key as HTTP Basic Auth credentials. Instead, you have to use your AWS credentials to request an ECR authorization token via the AWS API. That token is then used for HTTP Basic authentication.
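The token exchange described above can be reproduced by hand, which helps when debugging. The following is a minimal sketch, not part of the original setup: decode_ecr_token is a hypothetical helper name, and the commented-out call assumes the AWS CLI is installed and configured. An ECR authorization token is the Base64 encoding of "AWS:<password>", so decoding it yields the Basic Auth user name and password.

```shell
# Hypothetical helper: split a Base64-encoded ECR authorization token
# into the HTTP Basic Auth user name and password.
decode_ecr_token() {
  decoded="$(printf '%s' "$1" | base64 -d)"
  printf 'username=%s\n' "${decoded%%:*}"   # everything before the first colon
  printf 'password=%s\n' "${decoded#*:}"    # everything after the first colon
}

# Demonstration with a fake token, so nothing is sent to AWS:
fake_token="$(printf '%s' 'AWS:not-a-real-password' | base64)"
decode_ecr_token "$fake_token"

# With real credentials (requires the AWS CLI), the token could be fetched like this:
#   token="$(aws ecr get-authorization-token --region eu-central-1 \
#     --query 'authorizationData[0].authorizationToken' --output text)"
#   decode_ecr_token "$token"
```

The user name is always AWS; only the password part changes when a token expires and is requested again.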

KEP-2133 provides the new credentials API for container registries and also removes the existing in-tree provider. As KEP-2133 is part of the Kubernetes release 1.27, Kubernetes can no longer resolve the ECR authorization token and uses wrong/empty credentials instead.

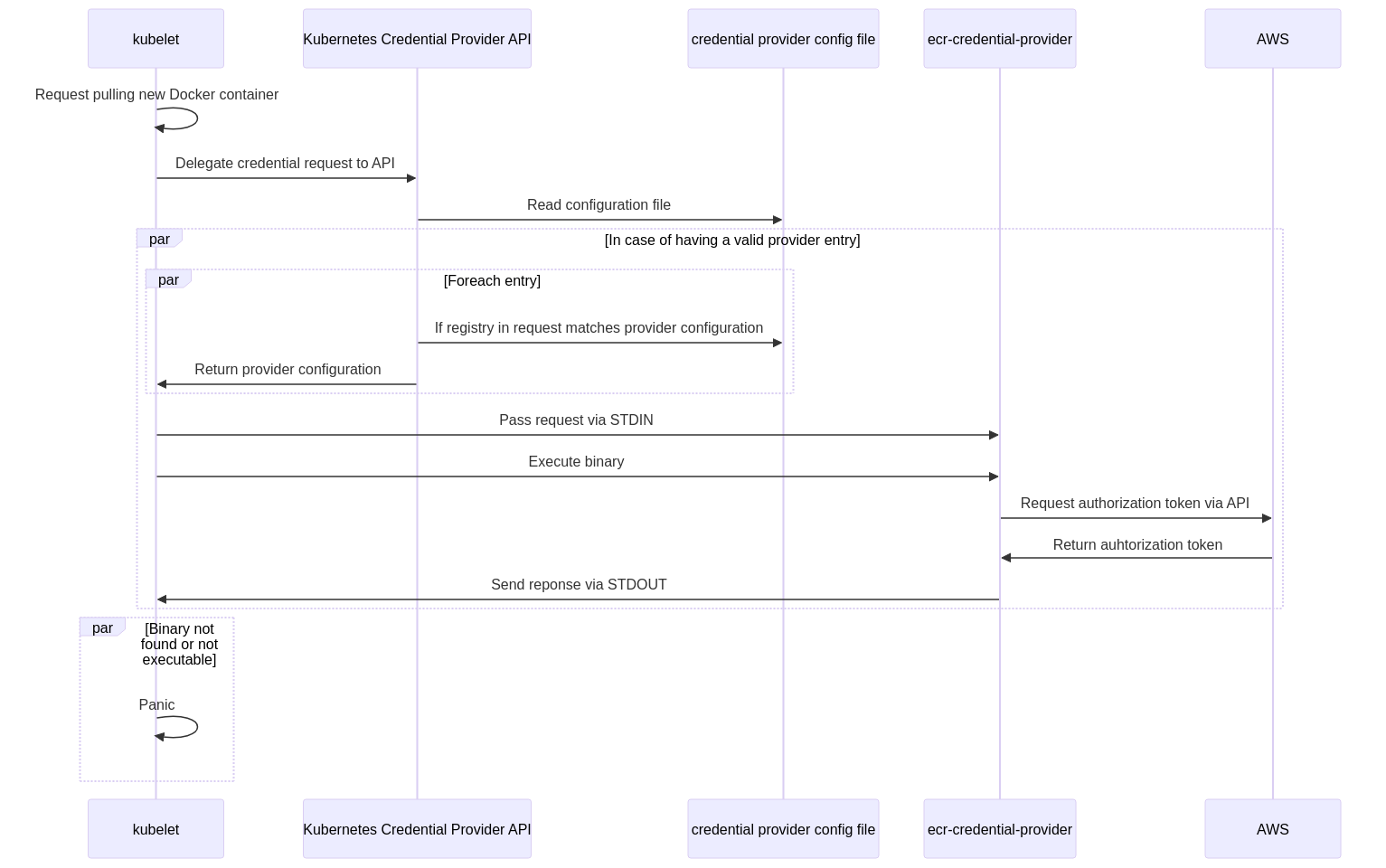

KEP-2133 extends the kubelet binary with the command line arguments --image-credential-provider-config and --image-credential-provider-bin-dir. Those arguments are used when a registry credential has to be resolved. --image-credential-provider-config specifies the configuration file containing all providers with the matching registries. --image-credential-provider-bin-dir specifies the directory in which the providers can be found.

Each provider must be its own binary/executable. When kubelet resolves a credential, it reads the specified configuration file and looks up the provider name. It then checks whether a file with that name exists in image-credential-provider-bin-dir. If it does, kubelet executes the binary and passes the credential request as JSON via stdin. The binary must respond with the defined JSON structure via stdout.
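To make this exchange concrete, here is a minimal sketch of a stand-in provider written as a shell function. It is not the real ecr-credential-provider: the name mock_credential_provider and the dummy password are illustrative assumptions. The field names follow the credentialprovider.kubelet.k8s.io/v1 API; upstream kubelet serializes these objects as JSON, which is also valid YAML.

```shell
# Hypothetical stand-in for a credential provider binary.
# Reads a CredentialProviderRequest from stdin and writes a
# CredentialProviderResponse to stdout, as kubelet expects.
mock_credential_provider() {
  request="$(cat)"
  # Naive extraction of the "image" field; a real provider would use a JSON parser.
  image="$(printf '%s\n' "$request" | sed -n 's/.*"image"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p')"
  # The registry host is everything before the first slash.
  registry="${image%%/*}"
  printf '{"apiVersion":"credentialprovider.kubelet.k8s.io/v1","kind":"CredentialProviderResponse","cacheKeyType":"Registry","cacheDuration":"0s","auth":{"%s":{"username":"AWS","password":"dummy-password"}}}\n' "$registry"
}

# Roughly what kubelet would send for the image from the error message above:
request='{"apiVersion":"credentialprovider.kubelet.k8s.io/v1","kind":"CredentialProviderRequest","image":"xxx.dkr.ecr.eu-central-1.amazonaws.com/package/app:555"}'
printf '%s\n' "$request" | mock_credential_provider
```

A real provider binary can be exercised the same way on a node by piping such a request into it, provided the node has AWS credentials available.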

Solution

The ecr-credential-provider binary from the cloud-provider-aws project must be installed on each node in the cluster. Then, the Rancher cluster.yaml has to be modified so that the arguments --image-credential-provider-bin-dir and --image-credential-provider-config are passed to kubelet.

Install the credentials provider for ECR

On each node, execute the following script:

# create the target directories (portable, no brace expansion needed)
mkdir -p /opt/aws/bin /opt/aws/config

# see https://github.com/kubernetes/cloud-provider-aws/blob/master/Makefile
# download binary (-f makes curl fail on HTTP errors instead of saving an error page)
curl -fOL https://storage.googleapis.com/k8s-staging-provider-aws/releases/v1.30.2/linux/amd64/ecr-credential-provider-linux-amd64

# move it to the mount location and make it executable
mv ecr-credential-provider-linux-amd64 /opt/aws/bin/ecr-credential-provider
chmod 755 /opt/aws/bin/ecr-credential-provider

# default configuration (overwrites the file so that re-running the script does not append duplicates)
cat <<EOT > /opt/aws/config/custom-credential-providers.yaml
apiVersion: kubelet.config.k8s.io/v1
kind: CredentialProviderConfig
providers:
  - name: ecr-credential-provider
    matchImages:
      # restrict it to your account's ECR
      - "*.dkr.ecr.*.amazonaws.com"
      - "*.dkr.ecr.*.amazonaws.com.cn"
    apiVersion: credentialprovider.kubelet.k8s.io/v1
    defaultCacheDuration: '0'
EOT

You can also directly download that snippet here.
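Before changing the cluster configuration, it can be worth confirming that both files are really in place on a node. The following is a minimal sketch; check_ecr_provider_files is a hypothetical helper, and the paths are the ones used in the script above.

```shell
# Hypothetical helper: verify that the provider binary and its configuration
# are in place below a base directory (defaults to /opt/aws).
check_ecr_provider_files() {
  base="${1:-/opt/aws}"
  status=0
  if [ ! -x "$base/bin/ecr-credential-provider" ]; then
    echo "missing or not executable: $base/bin/ecr-credential-provider" >&2
    status=1
  fi
  if [ ! -s "$base/config/custom-credential-providers.yaml" ]; then
    echo "missing or empty: $base/config/custom-credential-providers.yaml" >&2
    status=1
  fi
  return "$status"
}

if check_ecr_provider_files; then
  echo "ECR credential provider files look complete"
else
  echo "ECR credential provider files are incomplete"
fi
```

The check only looks at file presence and permissions; it does not validate the YAML content.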

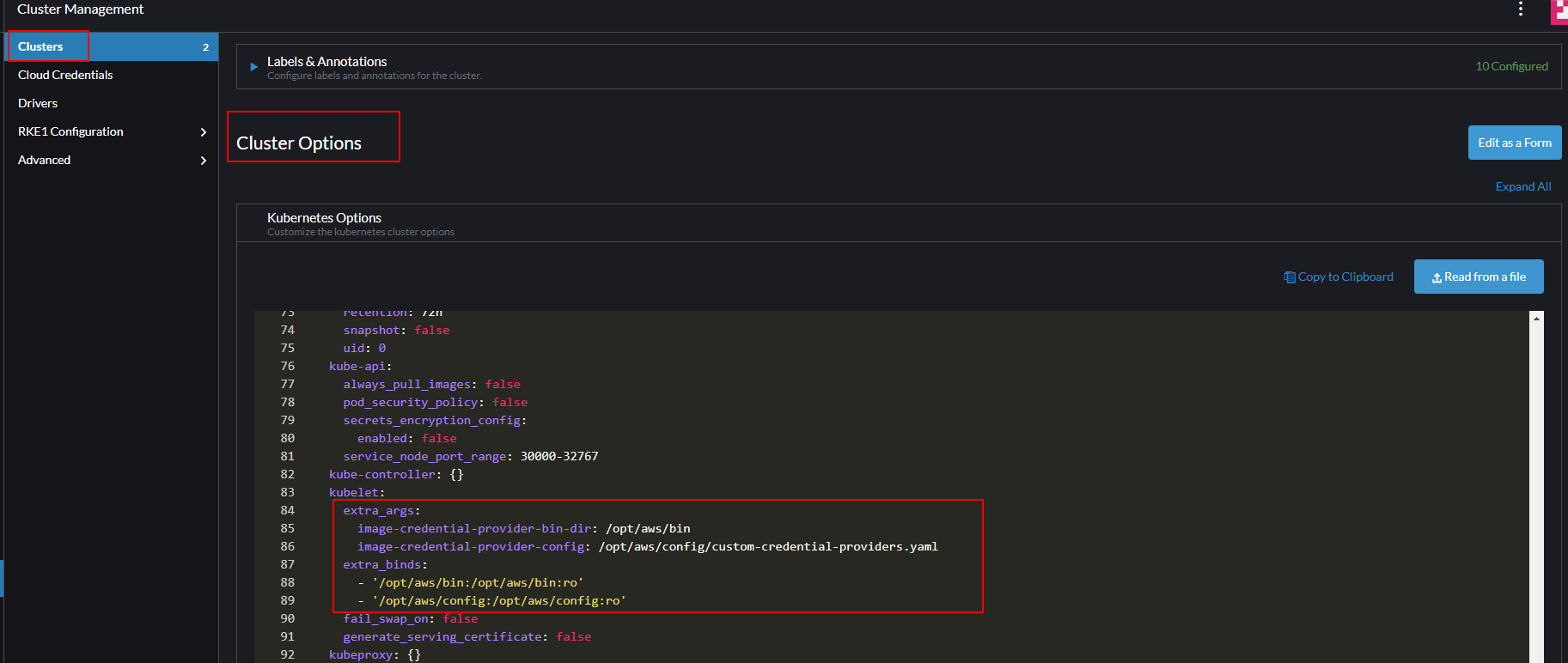

Update cluster configuration for kubelet arguments

In the Rancher UI go to Cluster Management > Clusters > ${DOWNSTREAM_CLUSTER} > Edit Config > Cluster Options > Edit as YAML.

Paste in the following YAML snippet inside the rancher_kubernetes_engine_config.services.kubelet section:

kubelet:
  extra_binds:
    # mount the bin and configuration directories created above
    - '/opt/aws/bin:/opt/aws/bin:ro'
    - '/opt/aws/config:/opt/aws/config:ro'
  extra_args:
    # pass the credential provider arguments to kubelet
    image-credential-provider-config: '/opt/aws/config/custom-credential-providers.yaml'
    image-credential-provider-bin-dir: '/opt/aws/bin'

If the extra_args are not persisted after saving, try changing the order of the YAML properties above (extra_args before extra_binds). That should do the trick.

kubelet has to be restarted.

Check on each of your cluster's nodes that the arguments have been passed:

$ ps aux | grep kubelet

root 760664 3.3 2.5 2223684 98952 ? Sl 06:40 0:03 kubelet --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock <...omitted> --image-credential-provider-config=/opt/aws/config/custom-credential-providers.yaml --image-credential-provider-bin-dir=/opt/aws/bin
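When there are many nodes, reading the ps output by eye gets tedious. The following is a minimal sketch that automates the same check; has_credential_provider_args is a hypothetical helper that only looks for the two flag names and does not validate their values.

```shell
# Hypothetical helper: succeed only if a kubelet command line contains both flags.
has_credential_provider_args() {
  case "$1" in
    *--image-credential-provider-config=*) ;;
    *) return 1 ;;
  esac
  case "$1" in
    *--image-credential-provider-bin-dir=*) ;;
    *) return 1 ;;
  esac
  return 0
}

# Collect the command lines of running kubelet processes (empty if none is running).
# The [k] in the pattern keeps grep from matching its own process.
cmdline="$(ps -eo args 2>/dev/null | grep '[k]ubelet ' || true)"
if has_credential_provider_args "$cmdline"; then
  echo "kubelet was started with both credential provider arguments"
else
  echo "kubelet is not running here or lacks the credential provider arguments"
fi
```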

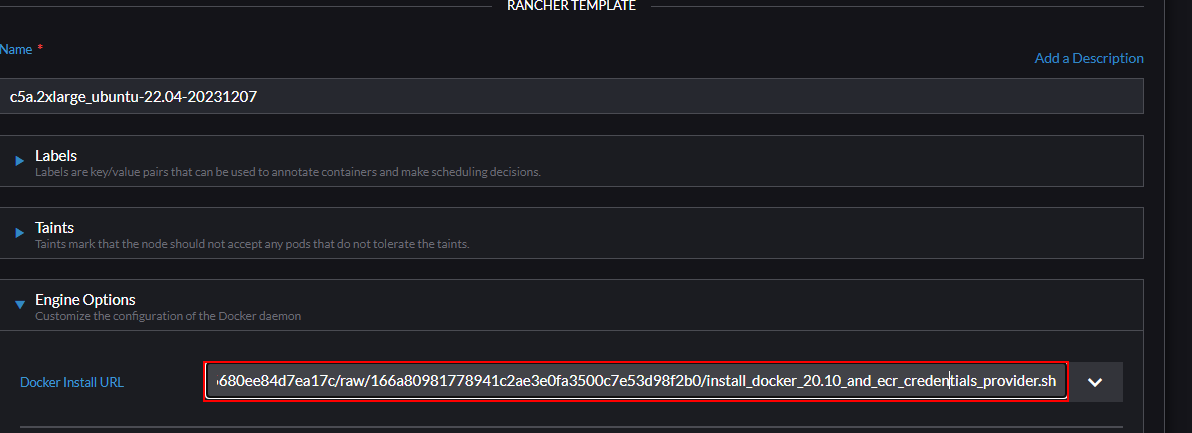

Bonus points: Deploying the binary and configuration for each new node

The current version 2.8.4 of Rancher does not allow you to specify any deployment-specific scripts. That means that you can neither specify a custom cloud-init file nor a user data section for EC2 instances.

If you do not want to bake a custom AMI, you can create a custom wrapper script for the Docker installation in a Rancher Node Template: In the Rancher UI go to Cluster Management > RKE1 Configuration > Node Templates > ${YOUR_NODE_TEMPLATE} > Edit > Last wizard page > Rancher Template > Engine Options. Change the Docker Install URL to your own script, which sets up the required files above. You can use the script install_docker_20.10_and_ecr_credentials_provider.sh as that URL so that all the steps above run automatically.
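Such a wrapper could look roughly like the following. This is a minimal sketch under stated assumptions, not the script referenced above: the function names, the DRY_RUN switch and the /tmp/install-docker.sh path are hypothetical, and DOCKER_INSTALL_URL assumes Rancher's stock Docker 20.10 install script. By default the sketch only prints the commands it would run; set DRY_RUN=0 to execute them.

```shell
#!/bin/sh
# Hypothetical wrapper for the "Docker Install URL" field.
# Prints the commands by default; run with DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"
# Assumption: Rancher's stock Docker 20.10 install script; adjust to your setup.
DOCKER_INSTALL_URL="${DOCKER_INSTALL_URL:-https://releases.rancher.com/install-docker/20.10.sh}"
# Same provider release as in the manual installation above.
PROVIDER_URL="https://storage.googleapis.com/k8s-staging-provider-aws/releases/v1.30.2/linux/amd64/ecr-credential-provider-linux-amd64"

# Either echo a command (dry run) or execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Writes the same provider configuration as the manual installation above.
write_provider_config() {
  cat > /opt/aws/config/custom-credential-providers.yaml <<'EOT'
apiVersion: kubelet.config.k8s.io/v1
kind: CredentialProviderConfig
providers:
  - name: ecr-credential-provider
    matchImages:
      - "*.dkr.ecr.*.amazonaws.com"
      - "*.dkr.ecr.*.amazonaws.com.cn"
    apiVersion: credentialprovider.kubelet.k8s.io/v1
    defaultCacheDuration: '0'
EOT
}

# The body runs in a subshell so that "set -eu" stays local to the installation.
install_docker_and_ecr_provider() (
  set -eu
  # 1. Install Docker with the regular install script.
  run curl -fsSL -o /tmp/install-docker.sh "$DOCKER_INSTALL_URL"
  run sh /tmp/install-docker.sh
  # 2. Install the ECR credential provider binary.
  run mkdir -p /opt/aws/bin /opt/aws/config
  run curl -fsSL -o /opt/aws/bin/ecr-credential-provider "$PROVIDER_URL"
  run chmod 755 /opt/aws/bin/ecr-credential-provider
  # 3. Write the provider configuration.
  run write_provider_config
)

install_docker_and_ecr_provider
```

Hosting the wrapper at a URL that new nodes can reach and entering that URL as the Docker Install URL means every freshly provisioned node gets the binary and configuration before kubelet starts.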